A simple cp clone in Rust: Trials and tribulations

As I was preparing the script for the next video (here’s the last one), I had to compare the performance of the vanilla command-line cp with a quick clone I wrote in Rust. Here’s the first attempt:

```rust
fn copy_with_read_write(source_path: &PathBuf, target_path: &PathBuf, blocksize: usize) {
    let source = OpenOptions::new().read(true).open(source_path).unwrap();
    let target = OpenOptions::new()
        .write(true)
        .truncate(true)
        .create(true)
        .open(target_path)
        .unwrap();

    unsafe {
        libc::posix_fadvise64(source.as_raw_fd(), 0, 0, POSIX_FADV_SEQUENTIAL);
    }

    let mut last_read = -1;
    let mut buf = Vec::with_capacity(blocksize);
    while last_read != 0 {
        last_read = unsafe { read(source.as_raw_fd(), buf.as_mut_ptr(), blocksize) };
        unsafe {
            write(target.as_raw_fd(), buf.as_mut_ptr(), last_read as size_t);
        };
    }
}
```

Pretty straightforward if you ask me. Do the fadvise(SEQUENTIAL) just like cp does, then read a few blocks at a time (32 blocks of 4 KiB, i.e. 131072 bytes, in this case) and write them out, all through the system-call wrappers from the libc crate. That should be really, really close to cp performance, right?

Right?

Let’s see:

```
chris@desktop:~/sources/codetales/code_tales/episode012$ perf stat target/release/copy -b 131072 sync

Performance counter stats for 'target/release/copy -b 131072 sync':

1 826,37 msec task-clock # 0,898 CPUs utilized
457 context-switches # 250,223 /sec
7 cpu-migrations # 3,833 /sec
120 page-faults # 65,704 /sec
7 532 509 609 cycles # 4,124 GHz (83,28%)
1 046 865 815 stalled-cycles-frontend # 13,90% frontend cycles idle (83,33%)
2 187 920 015 stalled-cycles-backend # 29,05% backend cycles idle (83,35%)
8 444 079 822 instructions # 1,12 insn per cycle
    # 0,26 stalled cycles per insn (83,25%)
1 726 426 151 branches # 945,278 M/sec (83,44%)
10 863 305 branch-misses # 0,63% of all branches (83,35%)

2,034570931 seconds time elapsed

0,000000000 seconds user
1,825130000 seconds sys
```

```
chris@desktop:~/sources/codetales/code_tales/episode012$ perf stat cp chunk chunk_copy

Performance counter stats for 'cp chunk chunk_copy':

1 699,44 msec task-clock # 0,918 CPUs utilized
803 context-switches # 472,508 /sec
13 cpu-migrations # 7,650 /sec
139 page-faults # 81,792 /sec
7 181 381 838 cycles # 4,226 GHz (83,20%)
1 019 720 977 stalled-cycles-frontend # 14,20% frontend cycles idle (83,52%)
1 970 764 871 stalled-cycles-backend # 27,44% backend cycles idle (83,55%)
8 427 695 142 instructions # 1,17 insn per cycle
    # 0,23 stalled cycles per insn (83,34%)
1 714 133 836 branches # 1,009 G/sec (83,11%)
10 712 345 branch-misses # 0,62% of all branches (83,27%)

1,851921938 seconds time elapsed

0,007983000 seconds user
1,688481000 seconds sys
```

Nope. There is a clear gap and, after multiple runs, it’s consistent. The Rust version also executes more instructions (about 8 444 million versus 8 428 million for cp), which to me seems to be the main problem.

But where could they be coming from? The loop is extremely tight, and I compile in release mode. The only place that could be a problem is…

…no. Could it be? Is it the as_raw_fd() and as_mut_ptr()?

Worth a try.

Here’s the second attempt:

```rust
fn copy_with_read_write(source_path: &PathBuf, target_path: &PathBuf, blocksize: usize) {
    let source = OpenOptions::new().read(true).open(source_path).unwrap().as_raw_fd();
    let target = OpenOptions::new()
        .write(true)
        .truncate(true)
        .create(true)
        .open(target_path)
        .unwrap().as_raw_fd();

    unsafe {
        libc::posix_fadvise64(source, 0, 0, POSIX_FADV_SEQUENTIAL);
    }

    let mut last_read = -1;
    let mut buf = Vec::with_capacity(blocksize).as_mut_ptr();
    while last_read != 0 {
        last_read = unsafe { read(source, buf, blocksize) };
        if last_read == -1 {
            println!("{}", Error::last_os_error());
            return;
        }
        unsafe {
            write(target, buf, last_read as size_t);
        };
    }
}
```

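The only real change is that the two as_ methods are no longer called inside the loop. I’d be surprised if that made a difference, though, since I expected the compiler to optimize those calls away. (I also added a check for read() returning -1, so that errors get printed.)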

Turns out, I was surprised. This version compiles just fine, but read() fails spectacularly:

```
chris@desktop:~/sources/codetales/code_tales/episode012$ target/release/copy -b 131072 sync
Bad file descriptor (os error 9)
```

That’s the very first read() call failing. OK, it didn’t like that, for some reason. Let’s go back to calling as_raw_fd() inside the loop and only keep the buffer pointer outside it:

```rust
fn copy_with_read_write(source_path: &PathBuf, target_path: &PathBuf, blocksize: usize) {
    let source = OpenOptions::new().read(true).open(source_path).unwrap();
    let target = OpenOptions::new()
        .write(true)
        .truncate(true)
        .create(true)
        .open(target_path)
        .unwrap();

    unsafe {
        libc::posix_fadvise64(source.as_raw_fd(), 0, 0, POSIX_FADV_SEQUENTIAL);
    }

    let mut last_read = -1;
    let mut buf = Vec::with_capacity(blocksize).as_mut_ptr();
    while last_read != 0 {
        last_read = unsafe { read(source.as_raw_fd(), buf, blocksize) };
        if last_read == -1 {
            println!("{}", Error::last_os_error());
            return;
        }
        unsafe {
            write(target.as_raw_fd(), buf, last_read as size_t);
        };
    }
}
```

Still doesn’t work, this time with a buffer error:

```
chris@desktop:~/sources/codetales/code_tales/episode012$ target/release/copy -b 131072 sync
Bad address (os error 14)
```

Now this doesn’t make any sense to me. Why would calling the as_ methods inside the loop work, while calling them outside it fails?

No matter, I don’t need to understand it to hack around it. I will assume that something goes wrong between obtaining the raw fd and the buffer pointer and using them for the first time. If that’s true, I can do one read/write pass outside the loop, then store the raw fds and the buffer pointer in variables and use those in the loop, avoiding calling the methods again and again. Here’s what that ended up looking like:

```rust
fn copy_with_read_write(source_path: &PathBuf, target_path: &PathBuf, blocksize: usize) {
    let source = OpenOptions::new().read(true).open(source_path).unwrap();
    let target = OpenOptions::new()
        .write(true)
        .truncate(true)
        .create(true)
        .open(target_path)
        .unwrap();

    unsafe {
        libc::posix_fadvise64(source.as_raw_fd(), 0, 0, POSIX_FADV_SEQUENTIAL);
    }

    let mut last_read = -1;
    let mut buf = Vec::with_capacity(blocksize);

    last_read = unsafe { read(source.as_raw_fd(), buf.as_mut_ptr(), blocksize) };
    unsafe {
        write(target.as_raw_fd(), buf.as_mut_ptr(), last_read as size_t);
    };

    let ptr = buf.as_mut_ptr();
    let src = source.as_raw_fd();
    let trgt = target.as_raw_fd();

    while last_read != 0 {
        last_read = unsafe { read(src, ptr, blocksize) };
        unsafe {
            write(trgt, ptr, last_read as size_t);
        };
    }
}
```

This time it worked, and I could get a perf result:

```
chris@desktop:~/sources/codetales/code_tales/episode012$ perf stat target/release/copy -b 131072 sync

Performance counter stats for 'target/release/copy -b 131072 sync':

1 616,56 msec task-clock # 0,912 CPUs utilized
666 context-switches # 411,986 /sec
7 cpu-migrations # 4,330 /sec
122 page-faults # 75,469 /sec
6 624 435 300 cycles # 4,098 GHz (83,35%)
978 640 608 stalled-cycles-frontend # 14,77% frontend cycles idle (83,51%)
1 889 970 907 stalled-cycles-backend # 28,53% backend cycles idle (83,00%)
6 929 942 620 instructions # 1,05 insn per cycle
    # 0,27 stalled cycles per insn (83,24%)
1 419 225 173 branches # 877,930 M/sec (83,37%)
8 646 227 branch-misses # 0,61% of all branches (83,53%)

1,772973499 seconds time elapsed

0,000000000 seconds user
1,613176000 seconds sys
```

It’s faster than before and as fast as vanilla cp.
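To put “a clear gap” and “as fast as cp” into numbers, here is a small sketch that expresses each Rust run as a percentage difference from the cp run. The instruction counts and elapsed times are copied from the three perf outputs above; the helper and constant names are made up for illustration.

```rust
/// Percent difference of `x` relative to `base` (negative means smaller than `base`).
fn percent_vs(x: f64, base: f64) -> f64 {
    (x - base) / base * 100.0
}

// (instructions, seconds elapsed), copied from the three `perf stat` runs above.
const CP: (f64, f64) = (8_427_695_142.0, 1.851_921_938);
const FIRST: (f64, f64) = (8_444_079_822.0, 2.034_570_931);
const HOISTED: (f64, f64) = (6_929_942_620.0, 1.772_973_499);

/// Returns (instructions, elapsed time) of `run` as percent differences from cp.
pub fn vs_cp(run: (f64, f64)) -> (f64, f64) {
    (percent_vs(run.0, CP.0), percent_vs(run.1, CP.1))
}
```

`vs_cp(FIRST)` comes out at roughly (+0.19, +9.9) and `vs_cp(HOISTED)` at roughly (-17.8, -4.3). A 0.2% difference in instructions is small next to a 10% difference in time, and almost all of that time is spent in the kernel (sys), which suggests single-run instruction counts are a noisy signal here.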

The problem is, of course, that I don’t know why it’s faster. All these calls should have been optimized away, and it’s even more perplexing that simply moving the method calls outside the loop made the program fail. I suspect the two are connected somehow, but I haven’t looked into how or why yet.

I’ll look at the sources more closely and try to make sense of some assembly dumps, but if you have any idea what is happening, please drop me a line on Mastodon.

Edit 1:

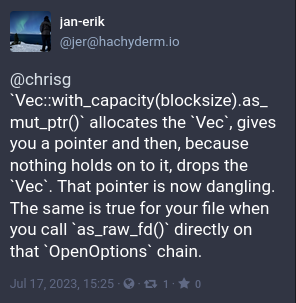

I had some help figuring out why the error happens when the as_ methods are called outside the loop. And when I say “had some help”, I mean that it was explained to me:

(source)

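In short: in the failing versions, the File and the Vec were temporaries, dropped at the end of the very statement that called as_raw_fd() or as_mut_ptr(). Dropping the File closes its file descriptor (hence “Bad file descriptor”), and dropping the Vec frees the buffer, leaving a dangling pointer (hence “Bad address”). Keeping the File and the Vec in named variables keeps them alive until the end of the function, so the following works just fine: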

```rust
fn copy_with_read_write(source_path: &PathBuf, target_path: &PathBuf, blocksize: usize) {
    let source = OpenOptions::new().read(true).open(source_path).unwrap();
    let target = OpenOptions::new()
        .write(true)
        .truncate(true)
        .create(true)
        .open(target_path)
        .unwrap();

    let mut buf = Vec::with_capacity(blocksize);
    let ptr = buf.as_mut_ptr();
    let src = source.as_raw_fd();
    let trgt = target.as_raw_fd();

    unsafe {
        libc::posix_fadvise64(src, 0, 0, POSIX_FADV_SEQUENTIAL);
    }

    let mut last_read = -1;
    while last_read != 0 {
        last_read = unsafe { read(src, ptr, blocksize) };
        unsafe {
            write(trgt, ptr, last_read as size_t);
        };
    }
}
```
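The broken and working versions differ only in how long the owning values live. Here is a hypothetical, std-only sketch of that rule: the `Owner` type and the `temporary`/`bound` functions are invented for illustration, with `Owner` standing in for File or Vec and `raw()` standing in for as_raw_fd() or as_mut_ptr(). A temporary is dropped at the end of its `let` statement, while a named binding lives until the end of the scope, even if a later `let` shadows its name.

```rust
use std::cell::RefCell;
use std::rc::Rc;

type Log = Rc<RefCell<Vec<String>>>;

/// Hypothetical stand-in for File/Vec: owns a handle and records when it is dropped.
pub struct Owner {
    id: i32,
    log: Log,
}

impl Owner {
    pub fn new(id: i32, log: &Log) -> Owner {
        Owner { id, log: Rc::clone(log) }
    }

    /// Like as_raw_fd(): hands out a plain copy that does not keep `self` alive.
    pub fn raw(&self) -> i32 {
        self.id
    }
}

impl Drop for Owner {
    fn drop(&mut self) {
        self.log.borrow_mut().push(format!("drop {}", self.id));
    }
}

/// Mirrors the failing attempts: the temporary Owner is dropped before `raw` is used.
pub fn temporary(log: &Log) {
    let raw = Owner::new(1, log).raw();
    log.borrow_mut().push(format!("use {}", raw));
}

/// Mirrors the working version: the Owner lives until the end of the function.
pub fn bound(log: &Log) {
    let owner = Owner::new(2, log);
    // Shadowing hides the name, but does not drop the Owner value.
    let owner = owner.raw();
    log.borrow_mut().push(format!("use {}", owner));
}
```

`temporary` records “drop 1” before “use 1”, which is the ordering behind the two OS errors above; `bound` records “use 2” before “drop 2”, like the working version.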

Edit 2:

I sort of solved the performance “problem”. It’s not real; it was just larger run-to-run variance than I expected. I did assembly dumps with the as_ methods inside the loop and outside it, and they are identical, down to the last instruction. So there is no difference apart from something messing up my measurements, and the numbers were pretty close to begin with.
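A minimal sketch of one way to check for this before chasing a gap: time each variant several times and only treat a difference as real if it clearly exceeds the spread. The helper names and the “means further apart than the summed standard deviations” rule are assumptions, a crude heuristic rather than a proper statistical test. perf stat can also repeat a run itself with its -r option and report the variation of the mean.

```rust
use std::time::Instant;

/// Mean and sample standard deviation (n - 1 in the denominator) of `samples`.
pub fn mean_and_stddev(samples: &[f64]) -> (f64, f64) {
    assert!(samples.len() >= 2, "need at least two samples");
    let n = samples.len() as f64;
    let mean = samples.iter().sum::<f64>() / n;
    let var = samples.iter().map(|x| (x - mean).powi(2)).sum::<f64>() / (n - 1.0);
    (mean, var.sqrt())
}

/// Runs `f` `runs` times and returns each run's wall-clock time in seconds.
pub fn time_runs<F: FnMut()>(runs: usize, mut f: F) -> Vec<f64> {
    (0..runs)
        .map(|_| {
            let start = Instant::now();
            f();
            start.elapsed().as_secs_f64()
        })
        .collect()
}

/// Crude heuristic: call two variants different only if their means are further
/// apart than the sum of their standard deviations.
pub fn clearly_different(a: &[f64], b: &[f64]) -> bool {
    let (mean_a, sd_a) = mean_and_stddev(a);
    let (mean_b, sd_b) = mean_and_stddev(b);
    (mean_a - mean_b).abs() > sd_a + sd_b
}
```

To use it, wrap each copy variant in a closure, collect around ten timings per variant with `time_runs`, and pass both vectors to `clearly_different`.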

Here is the final version of the sync copy method. When you watch the next video, you’ll know why it looks the way it does. Or, if you saw the video and came to this post to figure out why it looks like this: hey, welcome, and thanks for watching :)

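```rust
use std::fs::OpenOptions;
use std::io::Error;
use std::os::unix::io::AsRawFd;
use std::path::PathBuf;

use libc::{c_void, read, size_t, write, POSIX_FADV_SEQUENTIAL};

pub fn copy_with_read_write(source_path: &PathBuf, target_path: &PathBuf, blocksize: usize) {
    let source = OpenOptions::new().read(true).open(source_path).unwrap();
    let target = OpenOptions::new()
        .write(true)
        .truncate(true)
        .create(true)
        .open(target_path)
        .unwrap();
    // A plain byte buffer, cast to the void pointer that read()/write() expect.
    let mut buffer = vec![0u8; blocksize];

    // Shadowing does not drop the originals: the File and Vec values stay alive until
    // the end of the function, so the raw fds and the pointer below remain valid.
    let source = source.as_raw_fd();
    let target = target.as_raw_fd();
    let buffer = buffer.as_mut_ptr() as *mut c_void;

    unsafe {
        libc::posix_fadvise64(source, 0, 0, POSIX_FADV_SEQUENTIAL);
    }

    let mut last_read = -1;
    while last_read != 0 {
        last_read = unsafe { read(source, buffer, blocksize) };
        if last_read < 0 {
            // Without this check, -1 would be passed to write() as a huge size_t.
            println!("{}", Error::last_os_error());
            return;
        }
        let written = unsafe { write(target, buffer, last_read as size_t) };
        if written < 0 {
            println!("{}", Error::last_os_error());
            return;
        }
        // A short write would silently drop data; fail loudly instead.
        assert_eq!(written, last_read, "short write");
    }
}
```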
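For comparison, here is a minimal sketch of the same chunked copy using only the standard library, assuming the fadvise hint can be dropped (std has no wrapper for posix_fadvise). On Unix, `File::read` issues one read(2) per call without extra buffering, and `write_all` repeats write(2) until the whole chunk is written, retrying on EINTR, so the system-call pattern should stay essentially the same while errors and short writes are handled for you. The name `copy_with_std` is hypothetical.

```rust
use std::fs::{File, OpenOptions};
use std::io::{self, Read, Write};
use std::path::Path;

/// Copies `source_path` to `target_path` in chunks of `blocksize` bytes
/// and returns the number of bytes copied.
pub fn copy_with_std(source_path: &Path, target_path: &Path, blocksize: usize) -> io::Result<u64> {
    let mut source = File::open(source_path)?;
    let mut target = OpenOptions::new()
        .write(true)
        .truncate(true)
        .create(true)
        .open(target_path)?;
    // Initialized buffer, so no raw pointers or unsafe blocks are needed.
    let mut buf = vec![0u8; blocksize];
    let mut total = 0u64;
    loop {
        // One read(2) per iteration; Ok(0) means end of file.
        let n = match source.read(&mut buf) {
            Ok(0) => break,
            Ok(n) => n,
            Err(e) if e.kind() == io::ErrorKind::Interrupted => continue,
            Err(e) => return Err(e),
        };
        // write_all loops over write(2) until every byte of this chunk is written.
        target.write_all(&buf[..n])?;
        total += n as u64;
    }
    Ok(total)
}
```

Called as `copy_with_std(Path::new("chunk"), Path::new("chunk_copy"), 131072)`, it returns the number of bytes copied, or the first I/O error instead of looping on -1.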